Matrix Multiplication

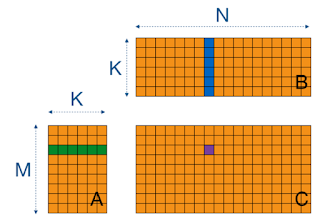

Matrix multiplication is simple, but it requires a lot of computation. When computing C = A × B, each element of C is the dot product of a row of A and a column of B. Because this has to be done for every element, multiplying an M × K matrix by a K × N matrix takes M × N × K multiply-add operations, which can take a considerable amount of time when the matrices are large.
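As a point of reference, the dot-product definition can be written as a plain CPU triple loop in C. This is a minimal sketch, assuming row-major storage with A of size M × K, B of size K × N and C of size M × N (the same layout the kernels below use). The name `matmul_ref` is hypothetical. The three nested loops make the M × N × K cost explicit.

```c
#include <assert.h>

/* CPU reference for C = A x B (hypothetical helper, not from the original post).
 * A is M x K, B is K x N, C is M x N, all stored row-major. */
void matmul_ref(int M, int N, int K,
                const float *A, const float *B, float *C) {
    assert(M > 0 && N > 0 && K > 0);
    for (int row = 0; row < M; row++) {
        for (int col = 0; col < N; col++) {
            /* One element of C = dot product of a row of A and a column of B. */
            float sum = 0.0f;
            for (int k = 0; k < K; k++) {
                sum += A[row * K + k] * B[k * N + col];
            }
            C[row * N + col] = sum;
        }
    }
}
```

For A = [[1, 2, 3], [4, 5, 6]] and B = [[7, 8], [9, 10], [11, 12]], `matmul_ref(2, 2, 3, A, B, C)` fills C with [[58, 64], [139, 154]].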

Matrix multiplication is well suited to parallelization and is a good example of GPU acceleration. NVIDIA already provides matrix multiplication in its libraries, but writing a GEMM (General Matrix Multiplication) kernel yourself helps you understand how cuBLAS or CUTLASS works.

Naive implementation

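```c
// Naive SGEMM: each work-item computes one element of C = A x B.
// A is M x K, B is K x N, C is M x N, all stored row-major.
__kernel void matrix_multiplication1(const int M, const int N, const int K,
                                     const __global float* A,
                                     const __global float* B,
                                     __global float* C) {
    const int globalRow = get_global_id(1); // row index of C (0..M-1)
    const int globalCol = get_global_id(0); // column index of C (0..N-1)

    // Skip work-items that fall outside C if the global size was rounded up.
    if (globalRow >= M || globalCol >= N) return;

    float localsum = 0.0f;
    for (int k = 0; k < K; k++) {
        // Dot product of row globalRow of A and column globalCol of B.
        localsum += A[globalRow * K + k] * B[k * N + globalCol];
    }

    // C has N columns, so its row stride is N (not K).
    C[globalRow * N + globalCol] = localsum;
}
```

The code above is a naive OpenCL implementation. Its only advantage over a CPU is the large number of threads, so its performance is poor: every work-item reads a full row of A and a full column of B directly from global memory, and global memory access on a GPU has very high latency. To improve performance, we can use local memory (called shared memory in CUDA).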
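To make the cost of global memory access concrete, one can count global reads. In the naive kernel each of the M × N work-items reads K elements of A and K elements of B, so the launch issues 2 × M × N × K global reads. If each work-group instead stages TS × TS tiles in local memory, each work-item loads only two elements per tile, K / TS times. The C sketch below simply evaluates these two formulas; the function names are hypothetical, K is assumed to be a multiple of TS, and hardware caches (which real GPUs have) are ignored.

```c
#include <assert.h>

/* Global-memory reads issued by the naive kernel: every one of the M*N
 * work-items reads K floats from A and K floats from B. */
long long naive_global_reads(long long M, long long N, long long K) {
    return 2 * M * N * K;
}

/* Global-memory reads when each work-group stages TS x TS tiles of A and B
 * in local memory: each work-item loads 2 floats per tile, for K / TS tiles.
 * Assumes K is a multiple of TS. Hardware caches are ignored. */
long long tiled_global_reads(long long M, long long N, long long K, long long TS) {
    assert(TS > 0 && K % TS == 0);
    return M * N * 2 * (K / TS);
}
```

For M = N = K = 1024 and TS = 16, the naive count is 2 × 1024³ ≈ 2.1 × 10⁹ reads, and the tiled count is 16 times smaller: every value fetched from global memory is reused TS times.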

Implementation with Shared Memory

__kernel

void matrix_multiplication2(const int M, const int N, const int K,

const __global float* A, const __global float* B, __global float* C) {

const int row = get_local_id(1);

const int col = get_local_id(0);

const int globalRow = TS * get_group_id(1) + row;

const int globalCol = TS * get_group_id(0) + col;

__local float Asub[TS][TS];

__local float Bsub[TS][TS];

float localsum = 0.0f;

const int numTiles = K / TS;

for (int i = 0; i < numTiles; i++) {

const int tiledRow = TS * i + row;

const int tiledCol = TS * i + col;

Asub[row][col] = A[globalRow*K + tiledCol];

Bsub[row][col] = B[tiledRow*N + globalCol];

barrier(CLK_LOCAL_MEM_FENCE);

for (int k = 0; k < TS; k++) {

localsum += Asub[row][k] * Bsub[k][col];

}

barrier(CLK_LOCAL_MEM_FENCE);

}

C[globalRow*N + globalCol] = localsum;

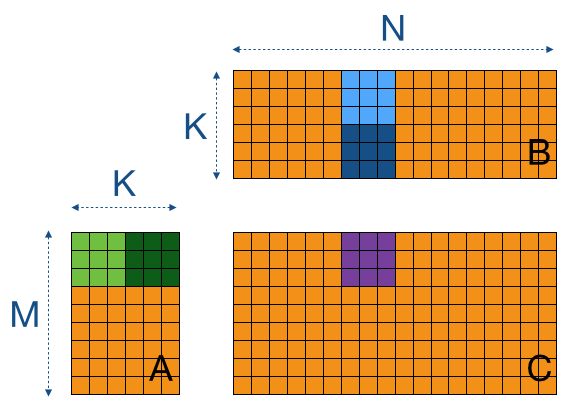

}In order to use the shared memory efficiently, it is recommended to use the data as much as possible once it is loaded. Since the size of shared memory that can be used per block is fixed, tiling is performed.
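The tiling scheme can be checked on the CPU by emulating the work-groups in plain C. This is a sketch, not the kernel itself: the outer loops stand in for work-groups, the `Asub`/`Bsub` arrays stand in for local memory, and finishing the whole "load" phase before the "compute" phase plays the role of `barrier(CLK_LOCAL_MEM_FENCE)`. The function name `matmul_tiled_emulated` and the tile size of 4 are hypothetical; as in the kernel, M, N and K must be multiples of the tile size.

```c
#include <assert.h>

#define TILE 4 /* hypothetical tile size for this CPU sketch */

/* CPU emulation of the tiled kernel: C = A x B, all row-major.
 * A is M x K, B is K x N, C is M x N. M, N, K must be multiples of TILE. */
void matmul_tiled_emulated(int M, int N, int K,
                           const float *A, const float *B, float *C) {
    assert(M % TILE == 0 && N % TILE == 0 && K % TILE == 0);
    float Asub[TILE][TILE], Bsub[TILE][TILE]; /* stand-ins for __local arrays */
    float acc[TILE][TILE];                    /* one localsum per work-item */

    for (int gr = 0; gr < M / TILE; gr++) {         /* like get_group_id(1) */
        for (int gc = 0; gc < N / TILE; gc++) {     /* like get_group_id(0) */
            for (int r = 0; r < TILE; r++)
                for (int c = 0; c < TILE; c++)
                    acc[r][c] = 0.0f;

            for (int t = 0; t < K / TILE; t++) {
                /* Load phase: work-item (r, c) loads one element of each tile. */
                for (int r = 0; r < TILE; r++) {
                    for (int c = 0; c < TILE; c++) {
                        Asub[r][c] = A[(gr * TILE + r) * K + (t * TILE + c)];
                        Bsub[r][c] = B[(t * TILE + r) * N + (gc * TILE + c)];
                    }
                }
                /* Compute phase starts only after every load: first barrier. */
                for (int r = 0; r < TILE; r++)
                    for (int c = 0; c < TILE; c++)
                        for (int k = 0; k < TILE; k++)
                            acc[r][c] += Asub[r][k] * Bsub[k][c];
                /* Tiles are reloaded only after all compute: second barrier. */
            }

            for (int r = 0; r < TILE; r++)
                for (int c = 0; c < TILE; c++)
                    C[(gr * TILE + r) * N + (gc * TILE + c)] = acc[r][c];
        }
    }
}
```

Comparing its output with a straightforward triple loop on small integer-valued matrices is a quick way to confirm that the tile indexing matches the kernel's `A[globalRow * K + tiledCol]` and `B[tiledRow * N + globalCol]`.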

Beyond tiling, other techniques can further improve GPU resource utilization, such as increasing the amount of work per thread or transposing matrix B.
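Of these, increasing the work per thread is the simpler one to sketch. In the CPU emulation below, each "work-item" computes WPT adjacent elements of one row of C and keeps them in a small accumulator array (registers on a GPU), so each value of A it reads is reused WPT times. The name `matmul_wpt` and WPT = 4 are hypothetical. Which columns a work-item owns is a design choice: GPU kernels often interleave them instead, so that neighbouring work-items still access neighbouring addresses.

```c
#include <assert.h>

#define WPT 4 /* hypothetical: outputs computed per work-item */

/* CPU sketch of "more work per thread": each emulated work-item computes
 * WPT adjacent elements of one row of C, so every A value it reads is
 * reused WPT times. All matrices row-major; N must be a multiple of WPT. */
void matmul_wpt(int M, int N, int K,
                const float *A, const float *B, float *C) {
    assert(N % WPT == 0);
    for (int row = 0; row < M; row++) {            /* like get_global_id(1) */
        for (int wi = 0; wi < N / WPT; wi++) {     /* like get_global_id(0) */
            float acc[WPT] = {0.0f};               /* per-work-item registers */
            for (int k = 0; k < K; k++) {
                const float a = A[row * K + k];    /* loaded once ... */
                for (int w = 0; w < WPT; w++) {    /* ... used WPT times */
                    acc[w] += a * B[k * N + wi * WPT + w];
                }
            }
            for (int w = 0; w < WPT; w++) {
                C[row * N + wi * WPT + w] = acc[w];
            }
        }
    }
}
```

With WPT outputs per work-item, the launch needs only N / WPT work-items per row, trading parallelism for more reuse per thread.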

The OpenCL matrix-multiplication SGEMM tutorial (cnugteren.github.io) covers more of these techniques, along with experimental results.
